Getting to the why behind Docker

Docker is ubiquitous

I came in that day planning to deploy a new microservice to production that afternoon. Within a few steps, I had most of my infrastructure stood up and just needed to make a minor tweak to my Dockerfile. With the end of the day approaching and my goal in sight, I realised just how much I took Docker, and by extension containers, for granted.

Every day, thousands of applications are deployed consistently and reliably, on all kinds of infrastructure, thanks to Docker and containerization.

Far from the cries of “it works on my machine”, it not only works on your machine, it works on everyone else’s, too. With a few short lines of code, we can package our application and its dependencies and run it anywhere a compatible container runtime is available.

Just like the shipping containers the name evokes, we can stack multiple containers on a single server or virtual machine, because containers are lightweight and isolated from one another.

Since Docker founder Solomon Hykes first demoed Docker at PyCon in March 2013, its popularity has exploded.

How did we get here though?

Computers are expensive

Much like buying an expensive rig only to play Stardew Valley (still a solid life choice, to be clear), we don’t want our expensive machines sitting mostly idle. If there are spare resources, we want to use them to run more applications.

Of course, this works best if we don’t have to worry about other ̶f̶l̶a̶t̶m̶a̶t̶e̶s̶ applications using all our stuff. So we want to keep them isolated, each thinking it has the whole ̶p̶l̶a̶c̶e̶ server to itself, which protects both us and them. We also want to make sure they don’t take more than their fair share of the ̶f̶o̶o̶d̶ server resources.

As part of my job, I need to make sure that we’re not only deploying applications securely, but also cutting down on infrastructure costs and maintenance. I’m incentivised to find mechanisms that make it cheaper to deploy and run my applications whilst maximizing the utility of the underlying hardware.

Enter virtualisation

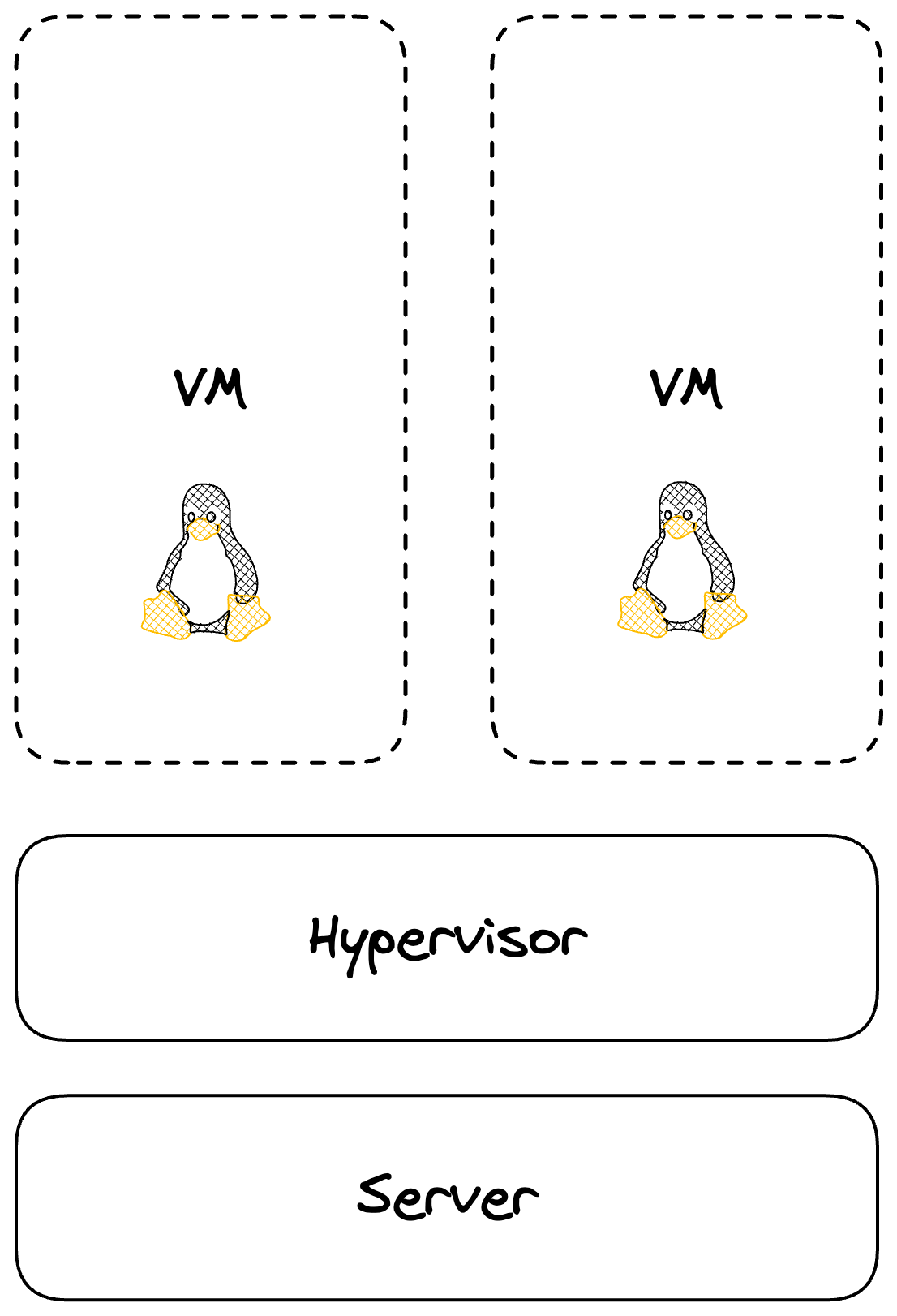

The epitome of “fake it till you make it”, a virtual machine (VM) is a software replica of a physical computer. The CPU, storage, and network adapters are all presented as a virtualized hardware stack, and each VM comes with its own guest operating system (OS).

We now have a software representation of something we’d typically think of as a physical device. To share the resources of the underlying physical hardware, we use a hypervisor: a layer of software that acts as a bridge of sorts, allocating resources to our various guest VMs.

Virtual Machines on physical hardware via a Hypervisor

This lets us deploy isolated applications on top of full operating systems and gives us better bang for our buck on our hardware.

There are of course some downsides.

We can’t easily adjust a VM’s compute and memory allocation on the fly, which hurts our ability to respond to changes in workload.

We have a larger resource overhead as a result of running a full operating system in each virtual machine. We incur the cost of this overhead for every VM on the hardware.

We can struggle with portability: every VM image bundles a full operating system, so moving VMs from one machine to another can be cumbersome.

We’ve been able to accomplish our goal of leveraging more out of our hardware, and we’ve got some great isolation going, but we’re not particularly efficient about how we allocate those resources, and change is hard.

We can do better, and we did.

Back to the future

Psst. Want a spoiler as to why Docker is so successful? It “just” provided a nice abstraction layer over work already done in Linux. That abstraction, along with images and layers, is what ultimately made it as ubiquitous as it’s become.

So what was that work?

Well, it turns out Linux was already building the tools for containerization, enabling the small blast radius for changes and the security benefits we’ve come to enjoy. That work, together with Docker’s popularity, eventually led to the Open Container Initiative (OCI), founded in 2015 to standardize container image and runtime formats. Docker is just one of several implementations of the OCI specifications.

Two Linux kernel features made this possible: namespaces and control groups.

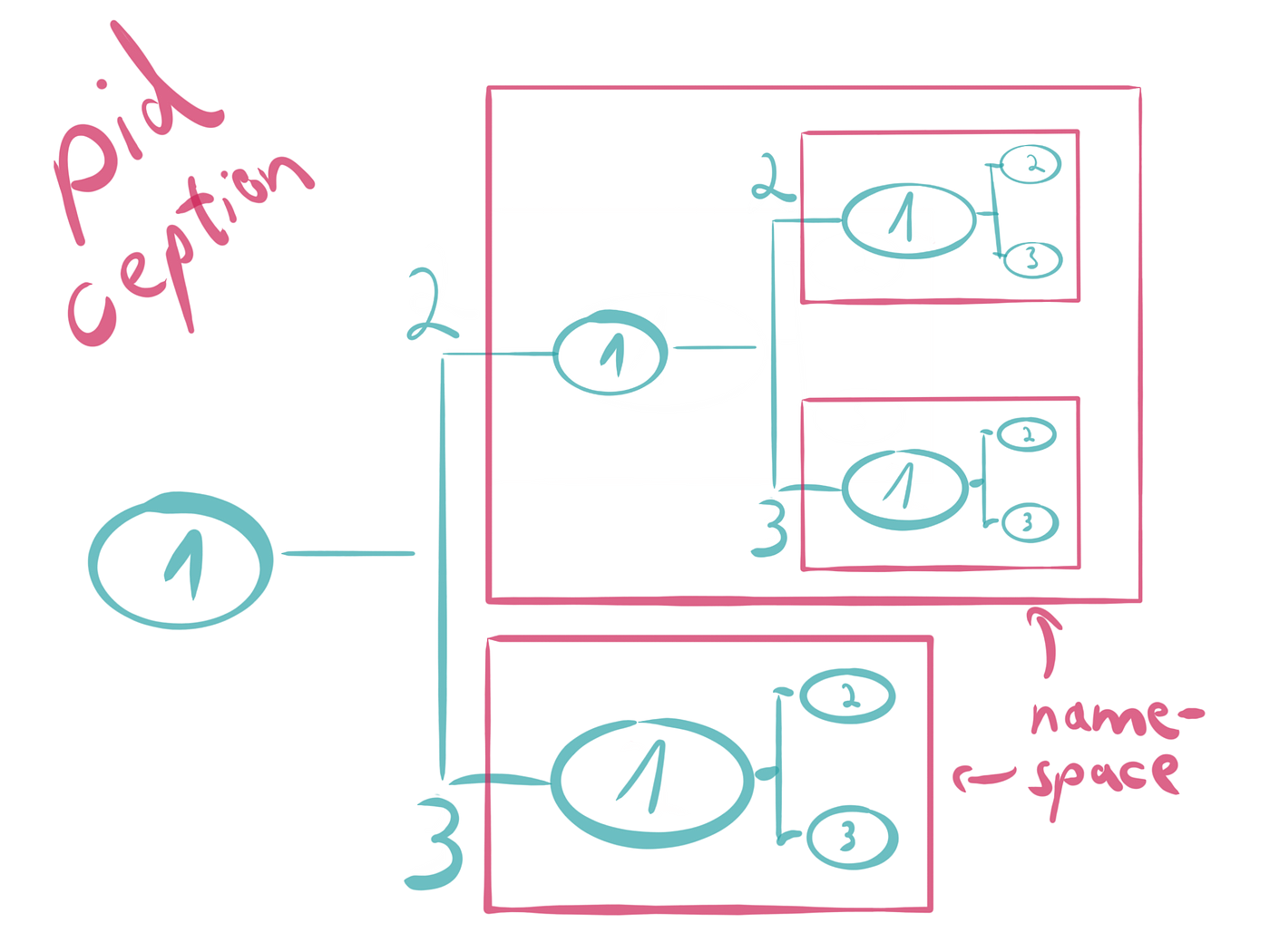

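Know how we want to keep our application isolated? Namespaces let us isolate processes whilst still sharing the host’s kernel. Each namespace type wraps one global resource, such as process IDs (PID), mount points, network interfaces, or the hostname (UTS), so that a process only sees its own copy. This means we get much of the isolation of a VM, with very little overhead in comparison.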

Isolated processes per namespace (credit: https://medium.com/@saschagrunert/demystifying-containers-part-i-kernel-space-2c53d6979504)

Before, if we wanted isolation, we had to spin up a full operating system. Now we can use features the host’s kernel already has to get similar isolation.
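To make this more concrete, here is a toy Python model of one namespace type, the PID namespace. It is not how the kernel implements namespaces. The `ToyKernel` class, its methods, and the process names are hypothetical and exist only for illustration. The model shows the effect: one kernel tracks every process, but each namespace numbers its own processes from 1 and can see only those processes.

```python
from itertools import count


class ToyKernel:
    """Toy model of PID namespaces (illustration only, not real kernel code).

    One kernel keeps a single process table. Each namespace numbers its own
    processes from 1, and from inside a namespace only its own processes are visible.
    """

    def __init__(self):
        self._global_pids = count(1)  # PIDs as the host sees them
        self._ns_pids = {}            # namespace name -> that namespace's own PID counter
        self.processes = []           # (global PID, namespace, namespaced PID, command)

    def spawn(self, command, namespace=None):
        """Start a process; namespace=None means it runs directly on the host."""
        global_pid = next(self._global_pids)
        ns_pid = None
        if namespace is not None:
            if namespace not in self._ns_pids:
                self._ns_pids[namespace] = count(1)
            ns_pid = next(self._ns_pids[namespace])
        self.processes.append((global_pid, namespace, ns_pid, command))
        return global_pid, ns_pid

    def ps_from_host(self):
        """The host sees every process, identified by its global PID."""
        return [(gpid, cmd) for gpid, _, _, cmd in self.processes]

    def ps_inside(self, namespace):
        """Inside a namespace, only that namespace's processes are visible."""
        return [(npid, cmd) for _, ns, npid, cmd in self.processes if ns == namespace]


kernel = ToyKernel()
kernel.spawn("systemd")
kernel.spawn("node server.js", namespace="app-a")
kernel.spawn("nginx", namespace="app-b")
kernel.spawn("sh", namespace="app-a")

print(kernel.ps_inside("app-a"))  # [(1, 'node server.js'), (2, 'sh')]
print(kernel.ps_inside("app-b"))  # [(1, 'nginx')]
print(kernel.ps_from_host())      # [(1, 'systemd'), (2, 'node server.js'), (3, 'nginx'), (4, 'sh')]
```

Real containers behave the same way. The first process in a container is PID 1 inside its namespace, even though the host knows that process by a different PID.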

Control groups (cgroups) let us limit and account for the hardware resources, such as CPU, memory, and I/O, that a container’s processes can use. Those limits can be changed while the container is running, so we can respond to the changing requirements of our application.

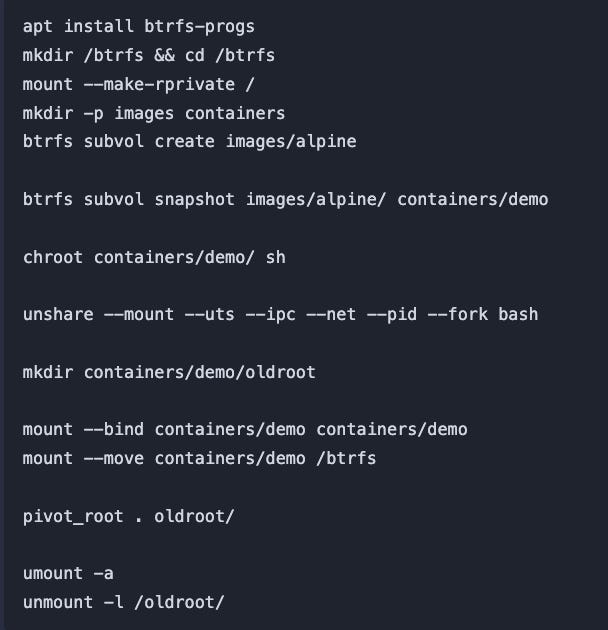
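Under cgroup v2, you set limits by writing to plain files in the cgroup file system, which is usually mounted at `/sys/fs/cgroup`. As I understand it, flags like `docker run --cpus=0.5 --memory=512m` end up as writes much like the ones below. This is a sketch that assumes a Linux host running cgroup v2. The helper names and the `demo` group are hypothetical, and only `cgroup_v2_limits` runs without root.

```python
from pathlib import Path

CPU_PERIOD_US = 100_000  # scheduling period in microseconds (100 ms, the usual default)


def cgroup_v2_limits(cpus=None, memory_bytes=None):
    """Translate friendly limits into cgroup v2 interface files and their values."""
    limits = {}
    if cpus is not None:
        # cpu.max holds "QUOTA PERIOD": the group may use QUOTA microseconds
        # of CPU time in every PERIOD microseconds.
        quota = round(cpus * CPU_PERIOD_US)
        limits["cpu.max"] = f"{quota} {CPU_PERIOD_US}"
    if memory_bytes is not None:
        # memory.max is a hard memory limit in bytes.
        limits["memory.max"] = str(memory_bytes)
    return limits


def apply_limits(cgroup_dir, limits, pid):
    """Write limits into an existing cgroup directory, then move a process into it.

    Needs Linux with cgroup v2 and root privileges, e.g. cgroup_dir="/sys/fs/cgroup/demo"
    (a hypothetical group created beforehand with mkdir).
    """
    group = Path(cgroup_dir)
    for name, value in limits.items():
        (group / name).write_text(value)
    (group / "cgroup.procs").write_text(str(pid))


limits = cgroup_v2_limits(cpus=0.5, memory_bytes=512 * 1024 * 1024)
print(limits)  # {'cpu.max': '50000 100000', 'memory.max': '536870912'}
```

Half a CPU becomes 50 ms of CPU time per 100 ms period. Because these are just files, the limits can be rewritten while the processes keep running.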

This is great! However, if I want to create my own container in Linux without Docker or one of the other open-source tools that implement the OCI spec, I need to run a bunch of Linux commands to set up the namespaces, cgroups, and file system by hand. This is tiring and error-prone, and I haven’t even gotten to the point where I can start setting up everything my application needs to run.

All aboard with Docker

We have a drawer filled to the brim with plastic snack containers.

Every day, I used to scramble to find the right lids and containers for the kids’ lunches, then find ways to stuff it all into their bags.

Was it more convenient and resource-efficient than plastic wrap?

Yes.

Was it still a pain?

Abso-freaking-lutely.

Until we got these amazing bento lunch boxes. Instead of spending up to 30 minutes just trying to find the missing lids, I could now just focus on organizing lunch.

Docker made it easy for developers to focus on their applications by making containers more convenient.

It abstracted away a lot of that boilerplate, simplified container creation, and added two big improvements: images and layers.

How simple?

```dockerfile
# Start your image with a node base image
FROM node:18-alpine

# The /app directory should act as the main application directory
WORKDIR /app

# Copy the app package and package-lock.json file
COPY package*.json ./

# Copy local directories to the current local directory of our docker image (/app)
COPY ./src ./src
COPY ./public ./public

# Install node packages, install serve, build the app, and remove dependencies at the end
RUN npm install \
    && npm install -g serve \
    && npm run build \
    && rm -fr node_modules

EXPOSE 3000

# Start the app using serve command
CMD [ "serve", "-s", "build" ]
```

(This simple Dockerfile example is from Docker’s own welcome-to-docker repo.)

Compared with setting up namespaces and cgroups by hand, we can now focus on what our application needs to run rather than on setting up the container itself.

One of my colleagues was famously fixated on ensuring that you could arrive in the morning with an idea for a service and leave in the afternoon with the skeleton of one in production, so you could get on with the act of building value.

Thanks to Docker, a lot of the work to get us to that outcome is already done, so we can deliver value sooner.

Docker has a couple of other tricks up its sleeve, though, that have helped make it ubiquitous.

Building containers in our own image

Remember our simple Dockerfile from earlier? When we build from those instructions, we get an image, and when we run that image, we get a container.

Let's see this in action. Start by cloning the welcome-to-docker repo and navigating to its directory.

Now if we run `docker build -t welcome-to-docker .`, we can watch as our Dockerfile, along with the files and folders in the repo (the build context), is used to build a Docker image tagged `welcome-to-docker`.

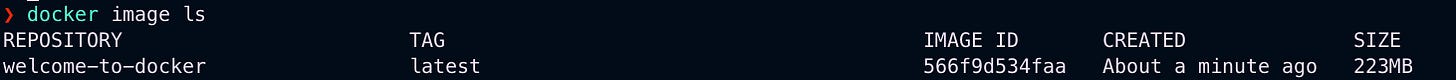

We can see our new image by running `docker image ls`, where `welcome-to-docker` should appear in the list.

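The image we’ve just built from our Dockerfile is effectively a read-only template. It contains the file system our application needs, plus metadata (such as the exposed port and the default command) describing how to configure and start our container.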

Images are a big part of what makes Docker so useful. Think of them as factories for your applications: we can start as many containers as we like from a single image. What’s more, we can distribute and share images through registries like Docker Hub.

Want a Ruby terminal without needing to install Ruby? You got it.

Want an incredibly compact Linux distribution? Say no more, friend.

Want to use an image to build other images? Absolutely.

You can share and host images wherever and whenever you want. Thanks to the OCI, there’s a standard specification for those images, so an image built with Docker can be used with other container tools like Podman.
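To give a feel for what that standard covers, here is a minimal sketch that assembles an OCI image manifest. A manifest is a JSON document that lists an image's config blob and its layer blobs, each identified by media type, `sha256` digest, and size. The field names and media types follow the OCI image spec. The helper functions and blob contents are made up, and a real image config carries much more, such as the `rootfs` diff IDs and the default command. OCI's format grew out of Docker's own image manifest, which is part of why other tools can pull images built with Docker.

```python
import hashlib
import json


def descriptor(media_type, blob):
    """An OCI content descriptor: what the blob is, its sha256 digest, and its size in bytes."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


def image_manifest(config_blob, layer_blobs):
    """Assemble a minimal OCI image manifest (no annotations, no multi-platform index)."""
    return {
        "schemaVersion": 2,
        "mediaType": "application/vnd.oci.image.manifest.v1+json",
        "config": descriptor("application/vnd.oci.image.config.v1+json", config_blob),
        "layers": [
            descriptor("application/vnd.oci.image.layer.v1.tar+gzip", blob)
            for blob in layer_blobs
        ],
    }


# Placeholder blobs: a real config also lists rootfs diff IDs, the default command, etc.,
# and real layers are gzipped tarballs rather than these stand-in bytes.
config_blob = json.dumps({"architecture": "amd64", "os": "linux"}).encode()
layer_blobs = [b"stand-in for the base layer", b"stand-in for the app layer"]

manifest = image_manifest(config_blob, layer_blobs)
print(json.dumps(manifest, indent=2))
```

Every blob is addressed by its digest, so any OCI-compliant registry or tool can fetch an image and verify it without knowing which tool built it.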

Being able to build once and share anywhere ultimately makes it faster for us to build, deploy, and run our software, especially when we use techniques to keep our images as small as possible.

Docker, and containers in general, enable me as an engineer to deliver value to customers and get feedback on my product faster.

All I need to do now is take that image and run `docker run -d -p 8088:3000 --name welcome-to-docker welcome-to-docker`.

This command starts a container named `welcome-to-docker` from our `welcome-to-docker` image and runs it in the background (`-d`, for detached). The `-p 8088:3000` flag publishes the container's port 3000, where `serve` is listening, on port 8088 of our own machine.

Easy, right?

For bonus points, go ahead and navigate to http://localhost:8088 in your browser.

🎉

There are just so many layers to Docker

So far we've looked at what problems containers solve, how to build an image, and how to run a container. We've already unlocked a tonne of value for ourselves and our customers, given how much easier it is to deploy containerized applications than to keep running everything on virtual machines.

But, much like the best knife salesperson on TV: wait, there's more.

If we run `docker image inspect welcome-to-docker`, we should see something like the following in the output:

```json
"RootFS": {
    "Type": "layers",
    "Layers": [
        "sha256:3ce819cc49704a39ce4614b73a325ad6efff50e1754005a2a8f17834071027dc",
        "sha256:d606342d924f434be9ff199a0c396d2f4b8adc3b27f68cf6004fab67c083611d",
        "sha256:682295b5673876aa6f231b5cbd440d11c4cb5e29b3f7af76b86fe92cff76b6bf",
        "sha256:894d7d58d9b18ba2c9ea2700f703b3a484d8ea074308e899a1fbec6a19fdbca7",
        "sha256:87548b8d745c27276ac43dc91bac3bb07a2805b2e7b0f5fd596c474ebf5c17eb",
        "sha256:599d0dd3aa3615bdd739da80fa973cbb72e529b6b0e51818c6f1c72916c3aad8",
        "sha256:8cbdd3c35c83d0d573622a80c4affadc4fe397bf6fa9d6471d1e5b4697b70017",
        "sha256:4cd1674d373d7b85aeb1bd0ab69bf90ac05f780588db07d7fac8c66bef17548a",
        "sha256:b4ab1336b97eccebbded36ce4b8a4450dd56b2dadcfccae572e62a0d0863f5c9"
    ]
},
```

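Every instruction in our Dockerfile that changes the file system (like `COPY` and `RUN`) became a read-only layer, stacked on the layers inherited from our `node:18-alpine` base image. Instructions like `EXPOSE` and `CMD` only record metadata. A union file system then overlays these layers to form a single file system. Docker's default storage driver, overlay2, is built on Linux's OverlayFS. Each running container also gets a thin writable layer on top.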
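Python's `collections.ChainMap` happens to behave a lot like a union file system, so here is a toy model of the idea. It is an analogy, not how OverlayFS works. The layer dictionaries and their contents are made up. Real union file systems also handle deletions (via "whiteout" files), permissions, and copying a file up from a lower layer before modifying it, and none of that is modeled here.

```python
from collections import ChainMap

# Hypothetical read-only image layers: each maps a file path to its contents.
base_layer = {"/etc/os-release": "Alpine Linux", "/usr/local/bin/node": "<node 18 binary>"}
code_layer = {"/app/src/index.js": "console.log('v1')", "/app/package.json": "{}"}
build_layer = {"/app/build/index.html": "<h1>Welcome</h1>"}

# ChainMap looks keys up left to right, so the leftmost map acts as the top layer:
# a file in a higher layer hides the same path in the layers below it.
image = ChainMap(build_layer, code_layer, base_layer)

# Each container gets its own empty, writable layer stacked on the shared image layers.
container_a = image.new_child()
container_b = image.new_child()

# Writes land only in container A's own top layer (deletions are not modeled).
container_a["/app/src/index.js"] = "console.log('patched')"

print(container_a["/app/src/index.js"])  # prints console.log('patched')
print(container_b["/app/src/index.js"])  # prints console.log('v1'): container B is unaffected
print(image["/app/src/index.js"])        # prints console.log('v1'): image layers are untouched
print(container_a["/etc/os-release"])    # prints Alpine Linux: read through from the base layer
```

Both containers share the same read-only layer objects and differ only in their small writable layer. This is why starting many containers from one image is cheap.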

Those layers give us more than the file system we see inside our container. Because they are read-only, they can be reused as a cache for later builds of this image, and shared with other images built on the same layers.
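Each entry in the `Layers` list above is a `sha256` digest of a layer's contents. Docker calls these DiffIDs: digests of the uncompressed layer tarball. Because a layer's ID is derived from its contents, identical layers get identical IDs, and that is what lets them be reused and shared. Below is a minimal sketch that assumes a layer is just a tar archive of files. `layer_diff_id` is a hypothetical helper. Real layers also carry permissions, ownership, and timestamps, which are pinned here so that only the content matters.

```python
import hashlib
import io
import tarfile


def layer_diff_id(files):
    """Pack files into an uncompressed tar archive and return its sha256 digest.

    files: dict mapping path -> contents (bytes). Metadata such as timestamps and
    ownership is pinned so that only the file contents affect the digest.
    """
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name in sorted(files):  # fixed order, so the same files give the same bytes
            data = files[name]
            info = tarfile.TarInfo(name)
            info.size = len(data)
            info.mtime = 0  # pin the timestamp
            tar.addfile(info, io.BytesIO(data))
    return "sha256:" + hashlib.sha256(buf.getvalue()).hexdigest()


v1 = layer_diff_id({"app/src/index.js": b"console.log('v1')"})
v1_again = layer_diff_id({"app/src/index.js": b"console.log('v1')"})
v2 = layer_diff_id({"app/src/index.js": b"console.log('v2')"})

print(v1 == v1_again)  # True: same content, same ID, so the layer can be reused
print(v1 == v2)        # False: any change to the content produces a new layer ID
```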

When a layer's inputs change, Docker rebuilds that layer. Because each layer builds on the one before it, every layer after it is rebuilt too. That's why we want the instructions that change least (like installing dependencies) near the top of the Dockerfile, and the ones that change most (like copying our source code) near the bottom. This way, we only rebuild what we need, when we need to, which saves us time and resources.
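To see why ordering matters, here is a simplified model of layer caching. It assumes each step's cache key is a hash of the parent step's key, the instruction text, and the contents of any files the step copies. That is close in spirit to how Docker decides whether it can reuse a cached layer, but the exact rules, especially BuildKit's, differ. The step lists and the `cache_keys` and `rebuilt_steps` helpers are hypothetical.

```python
import hashlib


def cache_keys(steps, context):
    """Return one cache key per build step.

    steps:   list of (instruction, [paths from the build context the step copies])
    context: dict mapping path -> file contents (bytes)
    Each key hashes the previous key, so changing one step changes every later key.
    """
    keys = []
    parent = ""
    for instruction, paths in steps:
        h = hashlib.sha256()
        h.update(parent.encode())
        h.update(instruction.encode())
        for path in paths:
            h.update(path.encode())
            h.update(context[path])
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(steps, old_context, new_context):
    """Count the steps whose cache key changed, i.e. the steps that must re-run."""
    old = cache_keys(steps, old_context)
    new = cache_keys(steps, new_context)
    return sum(1 for old_key, new_key in zip(old, new) if old_key != new_key)


# Dependencies first: a source change only invalidates the last two steps.
deps_first = [
    ("FROM node:18-alpine", []),
    ("COPY package*.json ./", ["package.json"]),
    ("RUN npm install", []),
    ("COPY ./src ./src", ["src/index.js"]),
    ("RUN npm run build", []),
]

# Source first: every source change also re-runs npm install.
source_first = [
    ("FROM node:18-alpine", []),
    ("COPY ./src ./src", ["src/index.js"]),
    ("COPY package*.json ./", ["package.json"]),
    ("RUN npm install", []),
    ("RUN npm run build", []),
]

before = {"package.json": b'{"name": "welcome"}', "src/index.js": b"console.log('v1')"}
after = {**before, "src/index.js": b"console.log('v2')"}  # edit one source file

print(rebuilt_steps(deps_first, before, after))    # 2
print(rebuilt_steps(source_first, before, after))  # 4
```

Editing one source file re-runs two steps in the first ordering but four in the second. In the second ordering, the source code is copied before the slow `npm install` step, so every code change also reinstalls the dependencies.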

In later posts, we'll look at how to make this even more efficient and shrink our images by combining some of our commands and deleting dependencies once we no longer need them.

Where would we be without Docker?

Whilst Docker isn't the only OCI-compliant containerization platform, a lot of what Docker did has made it so much easier to containerize and deploy our applications every day.

Its impact on my ability to ship value to our customers enables the fast feedback loops that help us continue to iterate and improve.

Resources and (better) reads

All of these resources were incredibly useful and insightful references while writing this article, and they will give you a far more comprehensive understanding.

The Docker Handbook - a great, comprehensive rundown on Docker and containers.

How Docker Layers Work - a fantastic YouTube video that deep dives into how layers and layer caching work.

Demystifying Containers - a fantastic article that looks into a lot of the technology behind containerization in Linux in far more depth than I've gone into here.